Introduction

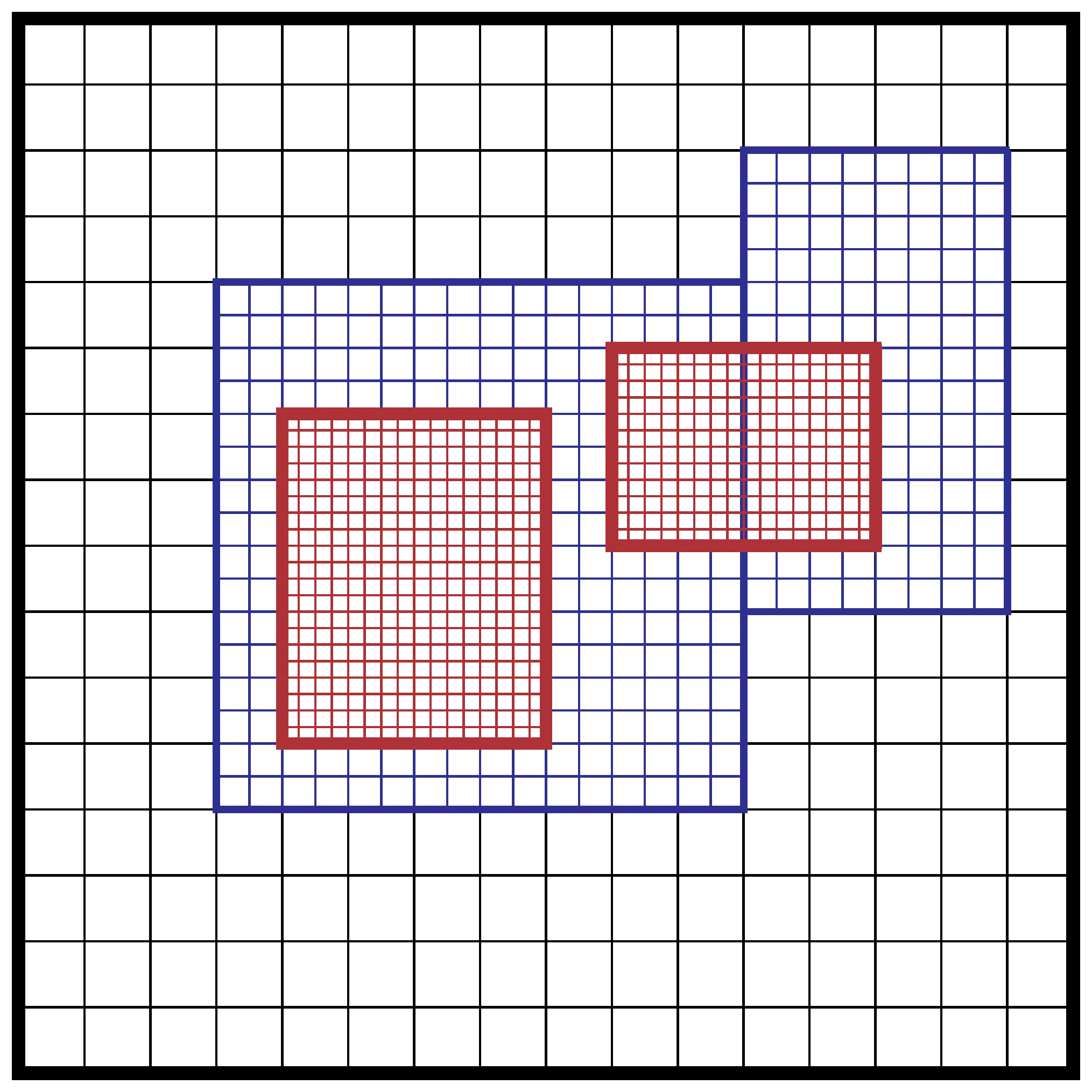

In numerical analysis, adaptive mesh refinement (AMR) is a method of adapting the accuracy of a solution within certain sensitive or turbulent regions of a simulation, dynamically, while the solution is being calculated [1].
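To make the idea concrete, here is a minimal 1D sketch of a single refinement pass. It assumes a simple gradient-based error indicator and a hand-picked threshold, and `flag_cells` and `refine` are hypothetical helpers; none of these come from a particular library. The code flags cells where the solution changes sharply and splits only those cells. In a real simulation this step is repeated as the solution evolves.

```python
import numpy as np

def flag_cells(u, threshold):
    """Flag cells where the undivided jump in u across the cell exceeds threshold.

    The indicator (half the central difference) and the threshold are
    illustrative choices; real codes offer many other criteria.
    """
    jump = np.empty_like(u)
    jump[1:-1] = np.abs(u[2:] - u[:-2]) / 2.0   # central difference in the interior
    jump[0] = abs(u[1] - u[0])                   # one-sided at the left end
    jump[-1] = abs(u[-1] - u[-2])                # one-sided at the right end
    return jump > threshold

def refine(edges, flags):
    """Split every flagged cell in half and return the new array of cell edges."""
    new_edges = [edges[0]]
    for i, flagged in enumerate(flags):
        left, right = edges[i], edges[i + 1]
        if flagged:
            new_edges.append(0.5 * (left + right))   # midpoint of the flagged cell
        new_edges.append(right)
    return np.array(new_edges)

edges = np.linspace(0.0, 1.0, 11)            # 10 uniform coarse cells on [0, 1]
centers = 0.5 * (edges[:-1] + edges[1:])
u = np.tanh((centers - 0.5) / 0.02)          # sharp front at x = 0.5
flags = flag_cells(u, threshold=0.5)
fine_edges = refine(edges, flags)
print(flags.astype(int))                     # [0 0 0 0 1 1 0 0 0 0]
print(len(fine_edges) - 1)                   # 12
```

Only the two cells next to the front are split. Block-structured libraries such as AMReX or Chombo do not split cells one by one like this; they group flagged cells into rectangular patches. The flag-then-refine step is still the starting point.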

Tutorials

Here are some tutorials on adaptive mesh refinement (AMR) and related topics.

- Adaptive mesh refinement for hyperbolic partial differential equations - ScienceDirect

- [2112.10567] Lessons for adaptive mesh refinement in numerical relativity

- [2404.02950] Sledgehamr: Simulating Scalar Fields with Adaptive Mesh Refinement

I found two excellent mini-codes on GitHub that are very useful for learning AMR. They are simple and easy to understand.

AMR Libraries

Here is a list of AMR libraries, some actively maintained and some not. I will try to keep this list up to date.

- AMR Resources, a website by Donna Calhoun listing AMR software built on the original Berger/Oliger/Colella adaptive mesh refinement algorithms

- [1610.08833] A Survey of High Level Frameworks in Block-Structured Adaptive Mesh Refinement Packages
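The Berger/Oliger approach refines in time as well as in space: each finer level takes several smaller steps (subcycling) for every step of the level below. Below is a minimal sketch of that recursive ordering. It assumes a fixed refinement ratio and replaces the actual PDE update with a log entry. The function `advance` and its arguments are hypothetical.

```python
def advance(level, t, dt, finest_level, ratio, log):
    """Advance `level` by one step of size dt, then subcycle the finer levels.

    The PDE update is replaced by appending (level, t_start, dt) to `log`,
    so the order of the recursive calls can be inspected.
    """
    log.append((level, t, dt))            # placeholder for the real update on this level
    if level < finest_level:
        fine_dt = dt / ratio
        for k in range(ratio):            # the finer level takes `ratio` smaller steps
            advance(level + 1, t + k * fine_dt, fine_dt, finest_level, ratio, log)
        # A real code would synchronize here: average the fine solution down onto
        # this level and correct fluxes at the coarse/fine interface (refluxing).
    return log

log = advance(0, 0.0, 1.0, finest_level=2, ratio=2, log=[])
for entry in log:
    print(entry)
```

With two refined levels and a ratio of 2, level 0 takes 1 step, level 1 takes 2, and level 2 takes 4. Because the cell size and the time step shrink by the same factor, every level runs at roughly the same CFL number.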

AMReX

AMReX is a software framework designed to accelerate scientific discovery for applications solving partial differential equations on block-structured meshes. Its massively parallel adaptive mesh refinement (AMR) algorithms focus computational resources and allow scalable performance on heterogeneous architectures so that scientists can efficiently resolve details in large simulations.
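In a block-structured code, cells flagged for refinement are grouped into rectangular boxes (patches) rather than refined individually. The following is a minimal 1D sketch of that grouping, not AMReX's actual algorithm. It assumes a fixed buffer of cells around each tag and a maximum box length, and `cluster_1d`, `buffer` and `max_size` are hypothetical names. Production codes use more sophisticated clustering (for example Berger-Rigoutsos-style algorithms). AMReX exposes parameters such as `max_grid_size` and `blocking_factor` that play a loosely similar role.

```python
def cluster_1d(flags, buffer=1, max_size=8):
    """Group flagged cells into boxes, returned as inclusive (lo, hi) index ranges.

    buffer:   extra cells added on each side of every flagged cell (assumed value).
    max_size: boxes longer than this are chopped into pieces, since
              block-structured codes typically cap patch size for load balancing.
    """
    n = len(flags)
    # 1. Grow the tag set by `buffer` cells on each side.
    grown = [False] * n
    for i, f in enumerate(flags):
        if f:
            for j in range(max(0, i - buffer), min(n, i + buffer + 1)):
                grown[j] = True
    # 2. Collect contiguous runs of grown tags as boxes.
    boxes = []
    i = 0
    while i < n:
        if grown[i]:
            start = i
            while i < n and grown[i]:
                i += 1
            boxes.append((start, i - 1))
        else:
            i += 1
    # 3. Chop boxes that are longer than max_size.
    chopped = []
    for lo, hi in boxes:
        while hi - lo + 1 > max_size:
            chopped.append((lo, lo + max_size - 1))
            lo += max_size
        chopped.append((lo, hi))
    return chopped

flags = [i in (3, 4) or 10 <= i <= 17 for i in range(20)]
boxes = cluster_1d(flags, buffer=1, max_size=8)
print(boxes)
```

The buffer gives a moving feature room to travel before the grid must be regenerated. The size cap keeps patches small enough to distribute across processes.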

MPI-AMRVAC

MPI-AMRVAC is a parallel adaptive mesh refinement framework aimed at solving (primarily hyperbolic) partial differential equations by a number of different numerical schemes. The emphasis is on (near) conservation laws and on shock-dominated problems in particular. A number of physics modules are included; the hydrodynamics and the magnetohydrodynamics module are most frequently used. Users can add their own physics module or modify existing ones. The framework supports 1D to 3D simulations, in a number of different geometries (Cartesian, cylindrical, spherical).

SAMRAI

SAMRAI (Structured Adaptive Mesh Refinement Application Infrastructure) is an object-oriented C++ software library that enables exploration of numerical, algorithmic, parallel computing, and software issues associated with applying structured adaptive mesh refinement (SAMR) technology in large-scale parallel application development. SAMRAI provides software tools for developing SAMR applications that involve coupled physics models and sophisticated numerical solution methods and that require high-performance parallel computing hardware. SAMRAI enables integration of SAMR technology into existing codes and simplifies the exploration of SAMR methods in new application domains.

Uintah

Uintah is an asynchronous many-task framework: a set of libraries and applications for simulating and analyzing complex chemical and physical reactions modeled by partial differential equations on structured adaptive grids, using hundreds to hundreds of thousands of processors.
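In an asynchronous many-task model, the work of a time step is split into tasks with explicit dependencies. A runtime launches each task as soon as its inputs are ready, instead of marching all processors through the same phases in lockstep. Below is a minimal sketch of that execution model in plain Python. It is not Uintah's scheduler or API: `run_task_graph` and the task names are hypothetical. Python threads are used only to show the scheduling logic and give no CPU parallelism for pure-Python work because of the GIL.

```python
from concurrent.futures import ThreadPoolExecutor, FIRST_COMPLETED, wait

def run_task_graph(tasks, deps, max_workers=4):
    """Run callables as soon as all of their dependencies have finished.

    tasks: dict mapping task name -> zero-argument callable
    deps:  dict mapping task name -> set of task names that must finish first
    Returns the task names in the order they completed.
    """
    remaining = {name: set(deps.get(name, ())) for name in tasks}
    running = {}
    done_order = []
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        while remaining or running:
            # Launch every task whose dependencies are all satisfied.
            ready = [name for name, d in remaining.items() if not d]
            for name in ready:
                del remaining[name]
                running[pool.submit(tasks[name])] = name
            if not running:
                raise ValueError("dependency cycle or dependency on a missing task")
            finished, _ = wait(running, return_when=FIRST_COMPLETED)
            for fut in finished:
                name = running.pop(fut)
                fut.result()                    # re-raise any exception from the task
                done_order.append(name)
                for d in remaining.values():    # release tasks waiting on this one
                    d.discard(name)
    return done_order

# Hypothetical tasks for one step on two patches.
tasks = {name: (lambda: None) for name in
         ["flux_p0", "flux_p1", "update_p0", "update_p1", "reduce_dt"]}
deps = {"update_p0": {"flux_p0"}, "update_p1": {"flux_p1"},
        "reduce_dt": {"update_p0", "update_p1"}}
order = run_task_graph(tasks, deps)
print(order)
```

The order among independent tasks (for example the two flux tasks) can vary from run to run. Only the dependency constraints are guaranteed.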

Parthenon

Parthenon is a performance-portable block-structured adaptive mesh refinement framework.

p4est

p4est is a C library to manage a collection (a forest) of multiple connected adaptive quadtrees or octrees in parallel.
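A tree-based mesh stores leaves (quadrants in 2D) and refines a leaf by replacing it with its four children. p4est orders leaves along a Z-order (Morton) space-filling curve and splits that ordered list into contiguous pieces for the processes. Below is a minimal single-tree sketch of those ideas. It is not p4est's API, and `morton_2d`, `build_tree`, `contains_point` and `partition` are hypothetical helpers. A leaf is a tuple `(level, x, y)` with integer coordinates at its level, and refinement here is driven by an assumed criterion: refine whichever leaf contains a given point.

```python
def morton_2d(x, y, bits):
    """Interleave the bits of integer coordinates (x, y) into a Z-order key."""
    key = 0
    for b in range(bits):
        key |= ((x >> b) & 1) << (2 * b)
        key |= ((y >> b) & 1) << (2 * b + 1)
    return key

def refine_leaf(leaf):
    """Split a quadrant (level, x, y) into its four children."""
    level, x, y = leaf
    return [(level + 1, 2 * x + dx, 2 * y + dy) for dy in (0, 1) for dx in (0, 1)]

def contains_point(leaf, px, py):
    """True if the quadrant covers point (px, py) of the unit square."""
    level, x, y = leaf
    size = 1.0 / (1 << level)
    return x * size <= px < (x + 1) * size and y * size <= py < (y + 1) * size

def build_tree(max_level, needs_refinement):
    leaves = [(0, 0, 0)]                       # the root quadrant is the unit square
    for _ in range(max_level):
        new_leaves = []
        for leaf in leaves:
            if leaf[0] < max_level and needs_refinement(leaf):
                new_leaves.extend(refine_leaf(leaf))
            else:
                new_leaves.append(leaf)
        leaves = new_leaves

    def z_key(leaf):
        # Lower-left corner expressed at the finest level, then Morton-encoded.
        level, x, y = leaf
        shift = max_level - level
        return morton_2d(x << shift, y << shift, max_level)

    return sorted(leaves, key=z_key)

def partition(leaves, nproc):
    """Split the Z-ordered leaves into nproc contiguous chunks of near-equal size."""
    n = len(leaves)
    return [leaves[r * n // nproc:(r + 1) * n // nproc] for r in range(nproc)]

leaves = build_tree(2, lambda leaf: contains_point(leaf, 0.3, 0.3))
parts = partition(leaves, 2)
print(leaves)
print([len(p) for p in parts])
```

Because neighboring leaves on the curve are usually close in space, contiguous chunks of the ordered list make reasonably compact subdomains. That is why a space-filling curve is a cheap way to load-balance tree-based meshes.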

Chombo (Not actively maintained)

Chombo provides a set of tools for implementing finite difference and finite volume methods for the solution of partial differential equations on block-structured adaptively refined rectangular grids. Both elliptic and time-dependent modules are included. Chombo supports calculations in complex geometries with both embedded boundaries and mapped grids, and Chombo also supports particle methods. Most parallel platforms are supported, and cross-platform self-describing file formats are included.
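One operation every finite volume AMR code needs is conservative restriction ("average down"): after a fine patch is updated, each covered coarse cell is replaced by the average of the fine cells inside it, so the integral of the solution is preserved. Below is a minimal NumPy sketch for uniform Cartesian cells with a refinement ratio of 2. It is a generic illustration, not Chombo's API, and `average_down` is a hypothetical name. With embedded boundaries, the average would have to be weighted by cell volume fractions instead.

```python
import numpy as np

def average_down(fine, ratio=2):
    """Conservatively restrict a 2D array of fine cell averages onto the coarse grid.

    Each coarse value is the mean of the ratio x ratio fine cells it covers,
    which preserves the integral over the covered region on uniform cells.
    """
    ny, nx = fine.shape
    if ny % ratio or nx % ratio:
        raise ValueError("fine patch must align with whole coarse cells")
    # Axis 1 indexes fine rows inside a coarse row; axis 3 fine columns inside a coarse column.
    return fine.reshape(ny // ratio, ratio, nx // ratio, ratio).mean(axis=(1, 3))

fine = np.arange(16, dtype=float).reshape(4, 4)
coarse = average_down(fine)
print(coarse)
# Conservation check: fine cells have area 1, coarse cells have area ratio**2 = 4.
print(fine.sum(), coarse.sum() * 4)
```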

Clawpack

Clawpack is a collection of finite volume methods for linear and nonlinear hyperbolic systems of conservation laws. Clawpack employs high-resolution Godunov-type methods with limiters in a general framework applicable to many kinds of waves. Clawpack is written in Fortran and Python.
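The idea behind "high-resolution Godunov-type methods with limiters" can be shown on the simplest conservation law, linear advection $u_t + a u_x = 0$ with $a > 0$. The scheme below uses upwinding plus a slope correction, limited with minmod so no new oscillations appear near discontinuities. Clawpack itself uses a wave-propagation formulation with Riemann solvers and wave limiters, so treat this as a minimal sketch of the general technique, not Clawpack's code. The function names are hypothetical, and periodic boundaries are assumed.

```python
import numpy as np

def minmod(a, b):
    """Minmod limiter: the smaller-magnitude slope if both have the same sign, else 0."""
    return np.where(a * b > 0.0, np.where(np.abs(a) < np.abs(b), a, b), 0.0)

def advect_step(u, a, dt, dx):
    """One step of a slope-limited upwind scheme for u_t + a u_x = 0 with a > 0.

    Periodic boundaries are handled with np.roll.
    """
    nu = a * dt / dx                               # Courant number
    if not 0.0 < nu <= 1.0:
        raise ValueError("CFL condition 0 < a*dt/dx <= 1 is required")
    s = minmod(u - np.roll(u, 1), np.roll(u, -1) - u)   # limited undivided slope in cell i
    flux_right = a * (u + 0.5 * (1.0 - nu) * s)    # flux at interface i+1/2 (upwind cell is i)
    flux_left = np.roll(flux_right, 1)             # flux at interface i-1/2
    return u - dt / dx * (flux_right - flux_left)

# Advect a square wave once around a periodic unit domain at Courant number 0.5.
dx, dt, a = 0.01, 0.005, 1.0
x = (np.arange(100) + 0.5) * dx
u0 = np.where((x > 0.25) & (x < 0.5), 1.0, 0.0)
u = u0.copy()
for _ in range(200):
    u = advect_step(u, a, dt, dx)
print(u.sum() - u0.sum(), u.min(), u.max())
```

Since the update is written in flux form, mass is conserved to rounding. The limiter keeps the solution between the initial minimum and maximum, at the cost of some smearing of the edges.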

Trixi.jl

Trixi.jl is a numerical simulation framework for conservation laws written in Julia.

AMReX Resources

I personally consider AMReX the best AMR library: it has modern code infrastructure and a large community. I spent some time studying it and have collected some resources here, which I hope will be useful to you as well.

AMReX Tutorials

- [2404.02950] Sledgehamr: Simulating Scalar Fields with Adaptive Mesh Refinement

- AMReX: Building a Block-Structured AMR Application | Ann Almgren and Donald Willcox, LBNL - YouTube

- AMReX - YouTube

- Adaptive Mesh Refinement: Algorithms and Applications - YouTube

- ATPESC 2022 5.2a Structured Meshes with AMReX | Ann Almgren, Erik Palmer - YouTube

- ATPESC 2022 5.2b Unstructured Meshes with MFEM/PUMI | A. Fisher, M. Shephard, C. Smith - YouTube

- ATPESC 2022 5.3a Iterative Solvers & Algebraic Multigrid Trilinos/Belos/MueLu | C. Glusa, G. Harper - YouTube

- ATPESC 2022 5.3b Direct Solvers with SuperLU STRUMPACK | Sherry Li, Pieter Ghysels - YouTube

- ATPESC 2022 5.4a Nonlinear Solvers with PETSc | Richard Tran Mills - YouTube

AMReX Examples

- MSABuschmann/sledgehamr: Sledgehamr is an AMReX-based code package to simulate the dynamics of coupled scalar fields on a 3-dimensional adaptive mesh.

- EinsteinToolkit/CarpetX: CarpetX is a Cactus driver for the Einstein Toolkit based on AMReX

- AMReX-Astro/Castro: Castro (Compressible Astrophysics): An adaptive mesh, astrophysical compressible (radiation-, magneto-) hydrodynamics simulation code for massively parallel CPU and GPU architectures.

- Exawind/amr-wind: AMReX-based structured wind solver

- ECP-WarpX/WarpX: WarpX is an advanced, time-based electromagnetic & electrostatic Particle-In-Cell code.

- GRTLCollaboration/GRTeclyn: Port of GRChombo to AMReX - under development!

- AMReX-Astro/STvAR: Space-Time Variable Code Generator and Solver

- [2210.17509] GRaM-X: A new GPU-accelerated dynamical spacetime GRMHD code for Exascale computing with the Einstein Toolkit